Traffic is steady. Demand is live.

And yet a publisher’s programmatic revenue falls off a cliff overnight.

No bans. No algo changes. Nothing obvious.

The culprit? One error in ads.txt.

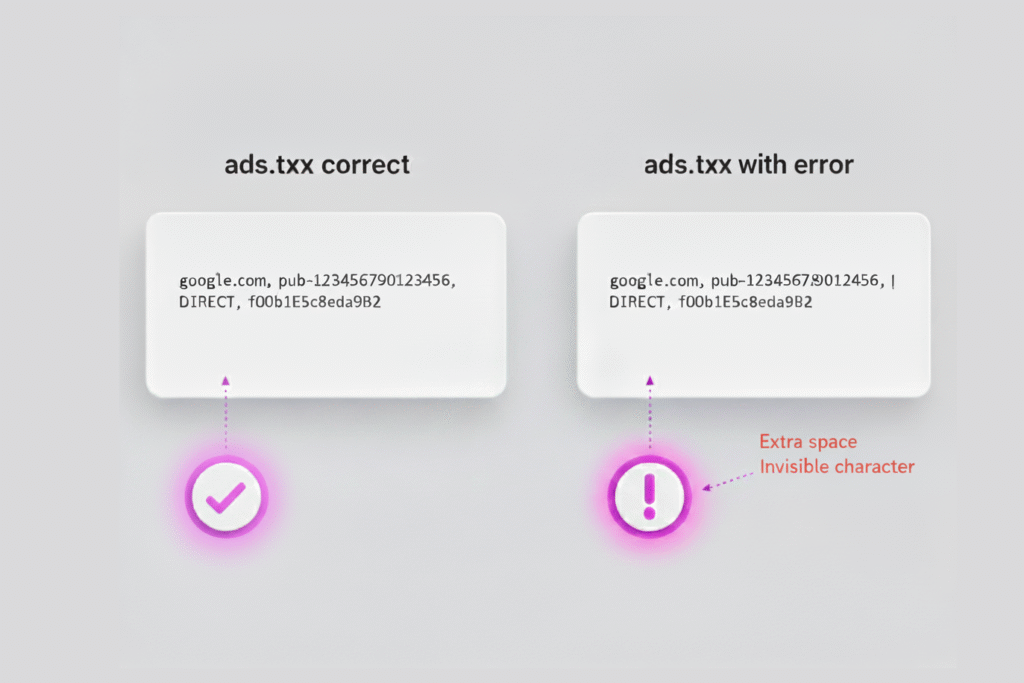

We saw it just last week: an extra space in a domain entry. One invisible character cut the publisher off from major demand sources. Revenue leaked every day until the issue was found.

This isn’t rare. Between 6% and 12% of ads.txt files fail to load for crawlers.

That means real publishers, with real traffic, lose bids because buyers’ crawlers can’t fetch or parse their authorization file.

The reality is simple:

- DSPs and exchanges only buy from authorized sellers

- No valid ads.txt = no authorization

- No authorization = no revenue

Why ads.txt errors are so common

Ads.txt was introduced in 2017 to combat ad fraud and improve supply chain transparency. The concept is straightforward: publishers list their authorized sellers in a publicly accessible text file.

Simple in theory. Messy in practice.

Here’s what we see breaking most often:

Syntax errors. Extra spaces, missing commas, incorrect line breaks. Text editors sometimes add invisible characters. Copy-pasting from PDFs or emails introduces formatting issues. A single misplaced character can invalidate an entire entry.

Outdated entries. Publishers add new partners but forget to remove old ones. Or they update a partnership but leave the deprecated domain in place. The file becomes a historical record rather than an accurate authorization list.

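Subdomain mismatches. A publisher’s ads.txt lives at example.com/ads.txt, but their ad requests come from www.example.com or m.example.com. Crawlers start from the root domain, so a subdomain that serves its own file typically needs to be declared there with a subdomain= line. Without that declaration, buyers may never read the subdomain’s file.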

Server configuration problems. The file returns a 404 error, redirects incorrectly, or gets blocked by security settings. From the outside, it looks like the publisher doesn’t have an ads.txt file at all.

Case sensitivity issues. Some exchanges match entries case-sensitively, which matters most for account IDs. Others don’t. A capitalization mismatch means the entry fails to match during validation, even though it looks correct to the human eye.

Most of these issues aren’t visible without technical inspection. Publishers check the file in their browser, see it loads fine, and assume everything works. But crawlers can see something different: a redirect, a blocked request, or a character the browser never shows.
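To make the syntax problems concrete, here is a minimal sketch of a line-level check in Python. It is an illustration, not a full implementation of the ads.txt specification: the function name `check_ads_txt_line`, the sample domain `examplessp.com`, and the exact rules (3 or 4 comma-separated fields, DIRECT or RESELLER as the relationship) are simplifying assumptions.

```python
import unicodedata

VALID_RELATIONSHIPS = {"DIRECT", "RESELLER"}

def check_ads_txt_line(raw_line):
    """Return a list of problems found in one ads.txt line (empty list = clean)."""
    problems = []
    # Flag characters a browser will not show: BOM, zero-width space, no-break space, etc.
    for ch in raw_line:
        if ch in "\t\r\n":
            continue
        category = unicodedata.category(ch)
        if category in ("Cf", "Cc") or (category == "Zs" and ch != " "):
            problems.append(f"invisible character U+{ord(ch):04X}")
    # Drop comments and surrounding whitespace before looking at the record itself.
    record = raw_line.split("#", 1)[0].strip()
    if not record:
        return problems  # blank or comment-only line
    if "=" in record and "," not in record:
        return problems  # variable line such as contact= or subdomain=
    fields = [field.strip() for field in record.split(",")]
    if len(fields) not in (3, 4):
        problems.append(f"expected 3 or 4 comma-separated fields, found {len(fields)}")
        return problems
    domain, account_id, relationship = fields[0], fields[1], fields[2]
    if " " in domain or "." not in domain:
        problems.append(f"suspicious domain {domain!r}")
    if not account_id:
        problems.append("empty account ID")
    if relationship.upper() not in VALID_RELATIONSHIPS:
        problems.append(f"unknown relationship {relationship!r}")
    return problems

# Hypothetical sample lines: one clean, one with a space in the domain, one missing commas.
for line in [
    "examplessp.com, 12345, DIRECT",
    "example ssp.com, 12345, DIRECT",
    "examplessp.com 12345 DIRECT",
]:
    print(repr(line), check_ads_txt_line(line))
```

Checking the raw line before stripping matters here: a byte-order mark or no-break space disappears in most editors and browsers, so it has to be caught at the character level.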

What happens when ads.txt fails validation

The consequences come quickly. Each buyer reacts as soon as its crawler picks up the broken file, so the damage builds as more buyers re-crawl.

When a DSP or exchange crawls your ads.txt and finds errors, your inventory gets flagged. Depending on the buyer’s policy, one of two things happens:

Hard block. The buyer stops bidding entirely. Your impressions never enter their auction. Fill rate drops. Revenue disappears.

Soft block. The buyer lowers bid density or applies heavy discounts. You still get some bids, but CPMs collapse because the inventory is treated as unauthorized or risky.

Either way, you lose revenue. And because most publishers don’t monitor ads.txt validation in real time, the problem can persist for days or weeks before anyone notices.

Here’s what that looks like in practice:

A mid-sized content site averages $8,000 per day in programmatic revenue. One Friday afternoon, someone updates ads.txt to add a new partner. They introduce a formatting error. By Monday morning, revenue is down 40%. By Wednesday, it’s down 60%. The team spends two days troubleshooting ad server configs, checking for bot traffic, reviewing demand partner dashboards. Nothing explains the drop.

Finally, someone checks ads.txt validation. The file returns a parsing error. One fix later, revenue starts recovering. But four days of losses can’t be recovered. At 50% average impact, that’s $16,000 gone.
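The $16,000 figure follows directly from the numbers in the example. A quick sketch of the arithmetic, assuming a flat average impact across the affected days (the function name is illustrative):

```python
def estimated_loss(daily_revenue, days_affected, average_impact):
    """Revenue lost while ads.txt was broken, assuming a flat average impact."""
    return daily_revenue * days_affected * average_impact

# $8,000 per day, four days, 50% average impact.
print(estimated_loss(8_000, 4, 0.50))  # 16000.0
```

Real losses rarely follow a flat rate, since the drop deepens as more buyers re-crawl, but the estimate is enough to show the scale.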

How sellers.json fits into this

Ads.txt authorizes which companies can sell a publisher’s inventory. Sellers.json identifies who those companies are.

The two files work together. If your ads.txt entry points to a seller ID that doesn’t appear in the corresponding sellers.json, buyers may treat the entry as invalid. Same outcome: fewer bids, lower revenue.

We see this frequently with reseller relationships. A publisher adds an SSP’s ads.txt line, but the SSP hasn’t updated their sellers.json to include that publisher’s account ID. From the publisher’s side, everything looks correct. From the buyer’s side, the chain breaks.

Sellers.json also matters for transparency mandates. Buyers increasingly filter inventory based on whether the full supply chain is disclosed. If any link in the chain is missing from sellers.json, some buyers won’t bid at all.
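The cross-check between the two files can be sketched in a few lines of Python. This is a simplified illustration that works on data already fetched and parsed: the function name `find_unlisted_sellers`, the sample domains, and the IDs are hypothetical. The `sellers` array and `seller_id` field follow the published sellers.json format.

```python
import json

def find_unlisted_sellers(ads_txt_entries, sellers_json_by_domain):
    """Return ads.txt entries whose account ID is absent from the matching sellers.json.

    ads_txt_entries: iterable of (ad_system_domain, account_id, relationship) tuples.
    sellers_json_by_domain: dict mapping an ad system domain to its parsed sellers.json.
    """
    missing = []
    for domain, account_id, _relationship in ads_txt_entries:
        document = sellers_json_by_domain.get(domain.lower())
        if document is None:
            missing.append((domain, account_id, "no sellers.json available"))
            continue
        listed_ids = {str(seller.get("seller_id")) for seller in document.get("sellers", [])}
        if account_id not in listed_ids:
            missing.append((domain, account_id, "seller_id not listed"))
    return missing

# Hypothetical data: one SSP lists account 12345 but not 99999.
entries = [
    ("examplessp.com", "12345", "DIRECT"),
    ("examplessp.com", "99999", "RESELLER"),
]
sellers = {
    "examplessp.com": json.loads(
        '{"sellers": [{"seller_id": "12345", "seller_type": "PUBLISHER"}]}'
    )
}
print(find_unlisted_sellers(entries, sellers))
```

The comparison here is exact and case-sensitive, which is the stricter assumption. A buyer that matches more loosely would flag fewer entries.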

What we built to solve this

At BidFuse, we see ads.txt and sellers.json issues every week in publisher audits. Most are fixable in minutes, but only if you catch them.

That’s why we built our own internal SPO (supply path optimization) validator. It runs continuous checks across three layers:

Syntax validation. The tool parses ads.txt files line by line, flagging formatting errors, invisible characters, and structural issues that would cause crawlers to fail.

Cross-reference checks. It verifies that every ads.txt entry has a corresponding valid entry in the relevant sellers.json file. If the chain breaks anywhere, we flag it immediately.

Crawl simulation. The validator mimics how DSPs and exchanges actually fetch and parse ads.txt. If there’s a redirect, a server error, or a subdomain mismatch, we catch it before buyers do.
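As a rough illustration of the crawl-simulation idea (not BidFuse’s actual validator), the sketch below classifies the result of a single fetch of /ads.txt. The function names and rules are assumptions made for the example. In particular, it accepts a redirect only when the host is unchanged apart from a leading www., which is stricter than the redirect rules in the ads.txt specification.

```python
from urllib.parse import urlsplit

def strip_www(host):
    """Drop a leading 'www.' so example.com and www.example.com compare equal."""
    return host[4:] if host.startswith("www.") else host

def assess_crawl_result(requested_url, final_url, status_code, content_type):
    """Classify one fetch of /ads.txt the way a cautious crawler might."""
    issues = []
    if status_code != 200:
        issues.append(f"HTTP status {status_code}")
    if not content_type.lower().startswith("text/plain"):
        issues.append(f"unexpected Content-Type {content_type!r}")
    requested_host = urlsplit(requested_url).hostname or ""
    final_host = urlsplit(final_url).hostname or ""
    # Simplifying assumption: any change of host beyond 'www.' counts as off-site.
    if strip_www(requested_host) != strip_www(final_host):
        issues.append(f"redirected off-site to {final_host}")
    final_path = urlsplit(final_url).path
    if final_path != "/ads.txt":
        issues.append(f"final path is {final_path!r}")
    return issues

# Hypothetical fetch: the request was bounced to a login page on another host.
print(assess_crawl_result(
    "https://example.com/ads.txt",
    "https://cdn.other.net/login",
    200,
    "text/html",
))
```

Separating the classification from the HTTP request keeps the rules easy to test, and the same function can be fed results gathered with different user agents.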

This isn’t something we sell as a standalone product. It’s part of how we onboard and monitor every publisher relationship. Before a publisher goes live, we validate their setup. After they’re live, we monitor continuously.

When an issue appears, we alert the publisher and provide the exact fix needed. No guesswork. No multi-day troubleshooting cycles.

Why this matters for SPO

Supply Path Optimization is supposed to create cleaner, more transparent routes between buyers and sellers.

But SPO only works if the foundational authorization layers are correct. If ads.txt breaks, it doesn’t matter how clean your supply path is. Buyers won’t bid.

That’s why we treat ads.txt and sellers.json validation as non-negotiable infrastructure. You can’t optimize a path that isn’t authorized in the first place.

The publishers who take this seriously see measurable impact:

- Faster onboarding with new demand partners

- Higher fill rates because authorization never breaks

- Better CPMs because buyers trust the inventory source

- Less time spent troubleshooting mystery revenue drops

The ones who ignore it lose revenue to invisible errors they don’t even know exist.

When was the last time you checked yours?

If you can’t remember, check now.

Load your ads.txt file in a browser. Then run it through a validation tool. If you don’t have one, use any of the free ads.txt validators available online. Compare the results.

Look for:

- Lines that produce parsing errors

- Domains with extra spaces or odd characters

- Entries that reference outdated or deprecated seller IDs

- Missing entries for active demand partners

- Server response codes that aren’t 200

Then cross-check sellers.json. Make sure every entity listed in your ads.txt has a corresponding entry in the relevant sellers.json file.

If you find issues, fix them immediately. The cost of neglect compounds daily.

And if you’re onboarding with a new monetization partner, ask them how they validate ads.txt and sellers.json. If they don’t have a clear process, that’s a signal.

At BidFuse, this is table stakes. We don’t assume files are correct. We validate them continuously, because in programmatic, authorization isn’t optional.

The fix takes minutes. The neglect costs thousands.